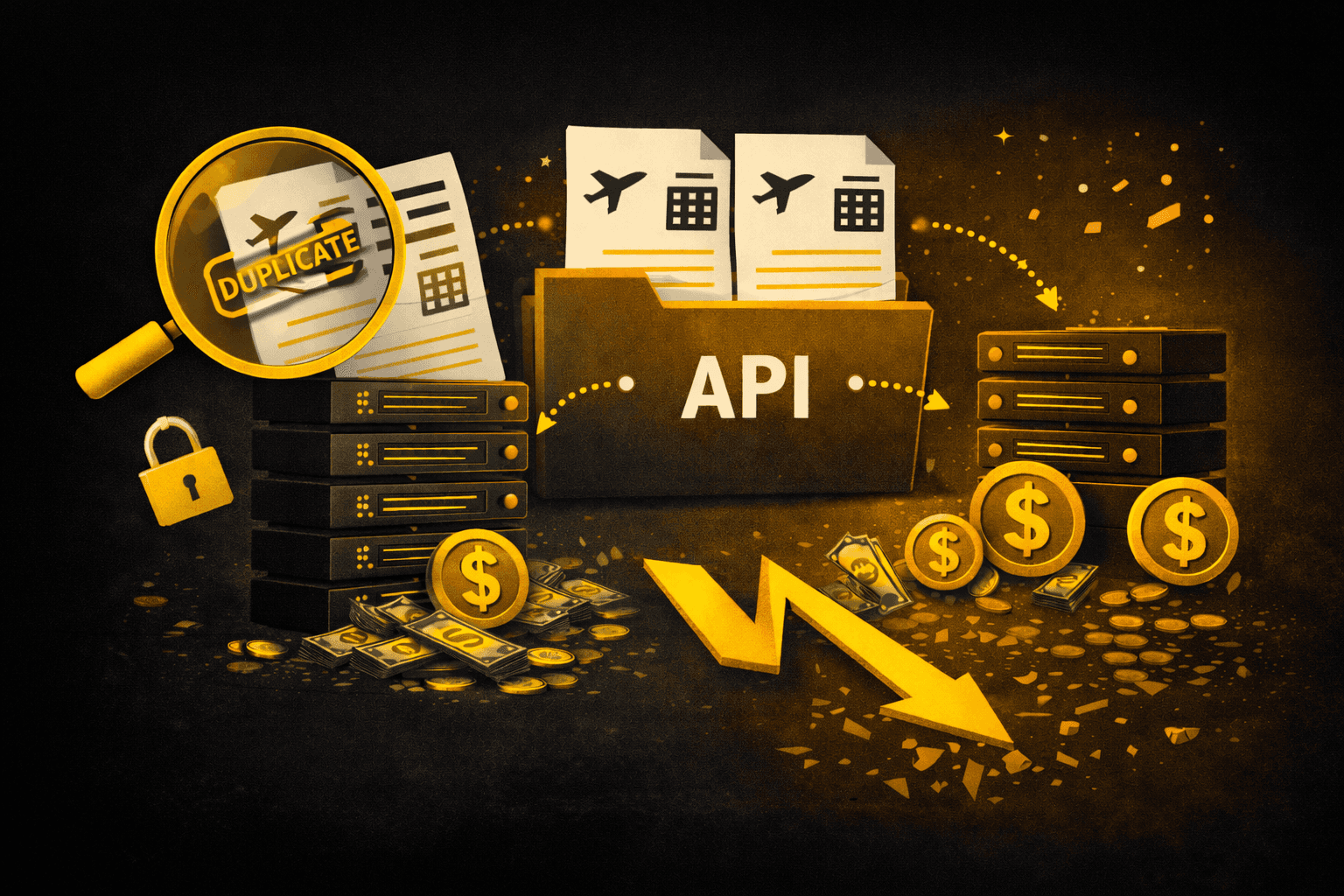

The Hidden Cost of Duplicate Content in Travel APIs

Most travel platforms measure API coverage by counting properties. 50,000 hotels sounds better than 30,000. 200,000 sounds even better.

The number is easy to communicate. Easy to compare. Easy to sell.

It's also misleading.

A platform with 200,000 hotel listings might only have 120,000 actual properties. The rest? Duplicates. Same hotel, different supplier codes. Same address, different property names. Same room, priced five different ways through five different channels.

The difference between property count and clean property count becomes a tax on everything downstream.

Where Duplicates Come From

Travel APIs aggregate inventory from multiple sources: global suppliers, regional DMCs (destination management companies), direct hotel connections, third-party consolidators. Each source has its own way of representing the same property.

Supplier A lists "Marriott Marquis New York" with property code NYC_MAR_001.

Supplier B lists "New York Marriott Marquis" with property code MARR-NYC-4312.

Supplier C lists "Marriott Marquis Times Square" with property code 8475-MAR.

Same hotel. Three entries in your system.
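An exact string comparison treats these as three different hotels, because no two of the names are identical. A minimal Python sketch, assuming word-token overlap (Jaccard similarity) as the comparison and using hypothetical helper names, shows both why normalization helps and why names alone are not enough:

```python
import re


def name_tokens(name: str) -> set[str]:
    """Lowercase a property name and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", name.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of distinct tokens two names have in common (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# The three supplier records from the example above, keyed by supplier code.
listings = {
    "NYC_MAR_001": "Marriott Marquis New York",
    "MARR-NYC-4312": "New York Marriott Marquis",
    "8475-MAR": "Marriott Marquis Times Square",
}

if __name__ == "__main__":
    codes = list(listings)
    for i, a in enumerate(codes):
        for b in codes[i + 1:]:
            exact = listings[a] == listings[b]
            score = jaccard(name_tokens(listings[a]), name_tokens(listings[b]))
            print(f"{a} vs {b}: exact={exact}, jaccard={score:.2f}")
```

Word order stops mattering (the first two names score 1.00), but the Times Square variant shares only two of six distinct tokens with either of the others and scores 0.33, even though it is the same hotel. Name similarity has to be combined with other signals.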

Multiply this across tens of thousands of properties and dozens of suppliers, and the duplication compounds. Without content mapping, your 200,000 listings might represent 120,000 actual hotels.

This isn't a data hygiene issue. It's a structural problem that affects conversion, user experience, and platform economics.

The User Experience Tax

A customer searches for hotels in Bangkok. Your API returns 842 results.

Except it's not 842 hotels. It might be 600 hotels, with 242 duplicate listings scattered throughout the results.

The Shangri-La Bangkok appears in position 12, position 47, and position 89. Same property, three different prices from three different suppliers. The user doesn't know they're looking at duplicates. They just see inconsistent pricing and confusing options.

What happens next is predictable.

Some users notice the duplication and lose trust. If you can't even deduplicate hotel listings, what else is broken?

Some users waste time comparing identical properties, thinking they're making an informed choice between different options.

Some users bounce because 842 results feels overwhelming and they're unsure which listings are legitimate.

Search interfaces compensate by hiding results. Recommendation engines struggle to differentiate between properties. Filters return misleading counts. Every layer of your product is working around a data problem that shouldn't exist.

The Algorithmic Penalty

Modern travel platforms don't just display lists. They rank, recommend, and personalize. Machine learning models optimize for conversion, user preferences, and revenue.

These systems depend on clean data.

When your training data contains duplicates, your models learn the wrong patterns. A hotel that appears three times in your database occupies three slots in every candidate set, collects three separate streams of impressions and interaction data, and carries three times the algorithmic weight.

Your recommendation engine thinks the duplicated properties are more popular than they actually are. Your pricing models can't accurately compare supplier performance. Your conversion optimization is built on noisy data.

You can try to fix this algorithmically. Add deduplication logic. Build fuzzy matching. Train models to recognize duplicates.

But now you're solving the wrong problem. You're building complexity to work around dirty data instead of fixing the data.
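Fixing the data usually starts with a mapping table that links each supplier listing to one canonical property, applied before signals reach a model. A minimal Python sketch, assuming a hypothetical mapping keyed by (supplier, supplier_code) and an invented click log that reuses the three Marriott Marquis codes from earlier:

```python
from collections import Counter

# Hypothetical mapping table: (supplier, supplier_code) -> canonical property ID.
CANONICAL = {
    ("A", "NYC_MAR_001"): "prop-1",
    ("B", "MARR-NYC-4312"): "prop-1",
    ("C", "8475-MAR"): "prop-1",
    ("A", "NYC_HOTEL_002"): "prop-2",
}

# Hypothetical click log: one (supplier, supplier_code) entry per click.
clicks = [
    ("A", "NYC_MAR_001"),
    ("B", "MARR-NYC-4312"),
    ("C", "8475-MAR"),
    ("A", "NYC_HOTEL_002"),
    ("A", "NYC_HOTEL_002"),
]


def by_listing(log):
    """What a model sees without mapping: every supplier listing is its own item."""
    return Counter(log)


def by_property(log, mapping):
    """After re-keying: every click is credited to one canonical property."""
    return Counter(mapping[entry] for entry in log)


if __name__ == "__main__":
    print(len(by_listing(clicks)), by_listing(clicks))
    print(len(by_property(clicks, CANONICAL)), by_property(clicks, CANONICAL))
```

Keyed by listing, the Marriott fills three of the four items a model can rank, and its clicks are scattered across them. Re-keyed, there are two items, and each hotel's interactions are counted once, in one place.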

The Hidden Infrastructure Cost

Duplicate content doesn't just confuse users and algorithms. It makes every technical operation more expensive.

Search becomes slower because you're filtering 200,000 records instead of 120,000.

Caching becomes less effective because you're storing redundant data.

Real-time pricing queries multiply because you're checking availability across duplicate listings.

Database indexing degrades because your data model is bloated with unnecessary entries.

Mobile clients download larger response payloads, increasing latency and data costs.

Every API call, every database query, every cache operation is processing duplicates. The computational overhead compounds across millions of searches.

This isn't about optimization. It's about carrying technical debt that slows everything down.

The Commercial Reality

Duplicate content also distorts commercial decisions.

When evaluating supplier performance, how do you measure a hotel that appears through three different suppliers? If Supplier A provides property XYZ with good rates but Suppliers B and C provide the same property with worse rates, your aggregate data shows the property underperforming.

You might deprioritize a high-performing hotel because half its listings come through poor-performing suppliers. You might keep paying for redundant supplier relationships because you can't isolate which supplier is actually converting.
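Once every listing carries a canonical ID, the attribution question above becomes a group-by. A minimal Python sketch, assuming a hypothetical session log that records the canonical property, the supplier, and whether the user booked; the numbers are invented to mirror the property XYZ scenario:

```python
from collections import defaultdict

# Hypothetical session log: (canonical_id, supplier, booked?) for each time a listing was shown.
sessions = [
    ("prop-xyz", "A", True),
    ("prop-xyz", "A", True),
    ("prop-xyz", "A", False),
    ("prop-xyz", "B", False),
    ("prop-xyz", "B", False),
    ("prop-xyz", "C", False),
]


def conversion_rates(log, key):
    """Bookings divided by times shown, grouped by whatever `key` extracts from a row."""
    shown = defaultdict(int)
    booked = defaultdict(int)
    for row in log:
        k = key(row)
        shown[k] += 1
        booked[k] += int(row[2])
    return {k: booked[k] / shown[k] for k in shown}


if __name__ == "__main__":
    # Pooled across suppliers, the property looks weak: 2 bookings out of 6 sessions.
    print(conversion_rates(sessions, key=lambda r: r[0]))
    # Split by supplier, A converts 2 out of 3 times while B and C never convert.
    print(conversion_rates(sessions, key=lambda r: (r[0], r[1])))
```

The same log supports both views; without a shared canonical ID, only the per-listing view exists, and the three listings cannot be compared as one hotel.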

Revenue attribution breaks. Commission tracking becomes ambiguous. Contract negotiations happen without accurate data.

What Content Mapping Actually Solves

Content mapping is the unglamorous work of linking duplicate listings to a single canonical property record.

When a new hotel is added from any supplier, the system checks: does this property already exist? It compares names, addresses, coordinates, amenity lists, photos. It uses deterministic rules and probabilistic matching to decide if two records represent the same place.
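A minimal Python sketch of such a matcher, assuming a two-stage design: hard rules first (a shared normalized address within 100 m is a match; listings more than 1 km apart never are), then a weighted score for everything in between. The weighted score is a simple heuristic standing in for true probabilistic matching, every threshold and weight is a hypothetical value, and amenities and photos are left out entirely:

```python
import math
import re
from dataclasses import dataclass


@dataclass
class Listing:
    supplier: str
    code: str
    name: str
    address: str
    lat: float
    lon: float


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so formatting differences disappear."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def name_similarity(a: str, b: str) -> float:
    """Jaccard overlap of the word tokens in two property names."""
    ta, tb = set(normalize(a).split()), set(normalize(b).split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 1.0


def distance_m(x: Listing, y: Listing) -> float:
    """Great-circle (haversine) distance between two listings, in metres."""
    p1, p2 = math.radians(x.lat), math.radians(y.lat)
    dp = p2 - p1
    dl = math.radians(y.lon - x.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6_371_000 * math.asin(math.sqrt(h))


def same_property(x: Listing, y: Listing, threshold: float = 0.55) -> bool:
    dist = distance_m(x, y)
    # Deterministic rule 1 (hypothetical): same normalized address within 100 m.
    if normalize(x.address) == normalize(y.address) and dist <= 100:
        return True
    # Deterministic rule 2 (hypothetical): never merge listings over 1 km apart.
    if dist > 1_000:
        return False
    # Scored part: weighted mix of name overlap and proximity (weights are assumptions).
    proximity = 1.0 - dist / 1_000
    score = 0.6 * name_similarity(x.name, y.name) + 0.4 * proximity
    return score >= threshold


# Illustrative records: invented addresses and coordinates, not real supplier data.
a = Listing("A", "NYC_MAR_001", "Marriott Marquis New York", "1 Example Plaza, New York, NY", 40.7586, -73.9858)
b = Listing("B", "MARR-NYC-4312", "New York Marriott Marquis", "1 EXAMPLE PLAZA NEW YORK NY", 40.7590, -73.9855)
c = Listing("C", "8475-MAR", "Marriott Marquis Times Square", "1 Example Plaza", 40.7584, -73.9862)
d = Listing("D", "XYZ-9", "Example Hotel Brooklyn", "2 Sample St, Brooklyn, NY", 40.6930, -73.9900)

if __name__ == "__main__":
    print(same_property(a, b), same_property(a, c), same_property(a, d))  # True True False
```

With these made-up records, the Times Square variant clears the 0.55 threshold only because it sits about 40 m away; its name overlap alone (2 of 6 tokens) would not carry it. That fragility is a reason to add more signals and to route borderline scores to manual review.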

When duplicates are found, they're merged into a single property with multiple supplier relationships attached. Users see one hotel with the best available rate across all sources. Algorithms work with clean data. Infrastructure processes fewer records.
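The merge step can be sketched in Python, assuming each supplier row already carries a canonical property ID from the matching step. Class, field, and function names are hypothetical, prices are assumed to be in a single currency, and the sample rows (including supplier codes and prices) are invented:

```python
from dataclasses import dataclass, field


@dataclass
class SupplierRate:
    supplier: str
    code: str
    price: float  # nightly rate, assumed already normalized to one currency


@dataclass
class CanonicalProperty:
    property_id: str
    name: str
    rates: list[SupplierRate] = field(default_factory=list)

    def best_rate(self) -> SupplierRate:
        """Cheapest rate across every supplier linked to this property."""
        return min(self.rates, key=lambda r: r.price)


def collapse(rows):
    """Merge raw (property_id, name, supplier, code, price) rows into one entry per property.

    Assumes property_id comes from an earlier mapping step; the first name seen is kept.
    """
    merged: dict[str, CanonicalProperty] = {}
    for property_id, name, supplier, code, price in rows:
        if property_id not in merged:
            merged[property_id] = CanonicalProperty(property_id, name)
        merged[property_id].rates.append(SupplierRate(supplier, code, price))
    return list(merged.values())


# Hypothetical search rows: the same Bangkok hotel from three suppliers, plus one other hotel.
rows = [
    ("bkk-001", "Shangri-La Bangkok", "A", "SLB-1", 180.0),
    ("bkk-002", "Riverside Example Hotel", "A", "REH-7", 95.0),
    ("bkk-001", "Shangri-La Bangkok", "B", "8812-SL", 165.0),
    ("bkk-001", "Shangri-La Bangkok", "C", "SHA_BKK", 172.0),
]

if __name__ == "__main__":
    for prop in collapse(rows):
        best = prop.best_rate()
        print(prop.name, best.supplier, best.price, len(prop.rates))
```

Four rows become two properties, and the Shangri-La shows once at supplier B's 165.0 with three supplier relationships attached. A real system would also need rules for which supplier's name, photos, and description win.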

This seems straightforward. In practice, it's continuous work.

Hotels rebrand. Chains reorganize. Suppliers update codes. New suppliers enter your network with different data standards. Content mapping isn't a one-time task. It's infrastructure maintenance.

But the alternative is worse.

The Real Question

When evaluating travel APIs, the relevant question isn't "how many properties do you have?"

It's: "How many clean, deduplicated properties do you have, and what percentage of your listings are duplicates?"
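Once a mapping table exists, the metric is simple to compute. A minimal Python sketch with hypothetical function names, defining the duplicate share as the fraction of listings that are extra copies of a property already counted, and applying it to the figures used earlier in this article:

```python
def duplicate_share(listings: int, unique_properties: int) -> float:
    """Fraction of listings that are extra copies of a property already counted."""
    if listings == 0:
        return 0.0
    return (listings - unique_properties) / listings


def count_unique(mapping: dict[tuple[str, str], str]) -> int:
    """Distinct canonical IDs in a (supplier, supplier_code) -> canonical ID table."""
    return len(set(mapping.values()))


if __name__ == "__main__":
    print(f"{duplicate_share(200_000, 120_000):.1%}")  # 40.0%
    print(f"{duplicate_share(842, 600):.1%}")  # 28.7%

    # The three Marriott Marquis listings map to one canonical property.
    table = {("A", "NYC_MAR_001"): "p1", ("B", "MARR-NYC-4312"): "p1", ("C", "8475-MAR"): "p1"}
    print(duplicate_share(len(table), count_unique(table)))
```

By this definition, the 200,000-listing platform from the opening example carries 40% duplicates, and the Bangkok search returns roughly 29% duplicates.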

Platforms that can't answer this question are carrying a hidden cost. It shows up in user experience. It shows up in infrastructure performance. It shows up in commercial clarity.

You can build on top of dirty data. Many platforms do. But you're building on a foundation that penalizes growth.

At a certain scale, duplication stops being an annoyance and becomes a structural constraint.

The platforms that scale cleanly are the ones that solve this problem early. Not because it's exciting work. Because it's necessary work.

Content quality isn't a feature. It's infrastructure.

And infrastructure matters most when you stop noticing it.